Effects of submarine cables deployment on Internet routing: CAIDA wins Best Paper at PAM 2020!

April 21st, 2020 by Roderick Fanou

Congratulations to Roderick Fanou, Bradley Huffaker, Ricky Mok, and kc claffy for being awarded Best Paper at the Passive and Active Network Measurement Conference (PAM 2020)!

The abstract from the paper, “Unintended Consequences: Effects of submarine cables deployment on Internet routing”:

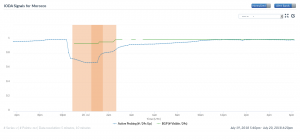

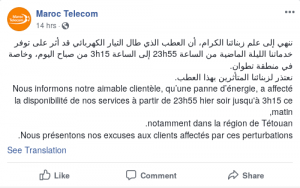

We use traceroute and BGP data from globally distributed Internet measurement infrastructures to study the impact of a noteworthy submarine cable launch connecting Africa to South America. We leverage archived data from RIPE Atlas and CAIDA Ark platforms, as well as custom measurements from strategic vantage points, to quantify the differences in end-to-end latency and path lengths before and after deployment of this new South-Atlantic cable. We find that ASes operating in South America significantly benefit from this new cable, with reduced latency to all measured African countries. More surprising is that end-to-end latency to/from some regions of the world, including intra-African paths towards Angola, increased after switching to the cable. We track these unintended consequences to suboptimally circuitous IP paths that traveled from Africa to Europe, possibly North America, and South America before traveling back to Africa over the cable. Although some suboptimalities are expected given the lack of peering among neighboring ASes in the developing world, we found two other causes: (i) problematic intra-domain routing within a single Angolese network, and (ii) suboptimal routing/traffic engineering by its BGP neighbors. After notifying the operating AS of our results, we found that most of these suboptimalities were subsequently resolved. We designed our method to generalize to the study of other cable deployments or outages and share our code to promote reproducibility and extension of our work.

The study presents a reproducible method for investigating the impact of a cable deployment on the macroscopic Internet topology and on end-to-end performance. We applied this method to SACS (South-Atlantic Cable System), the first South-Atlantic cable connecting South America to Africa, using historical traceroutes from the Archipelago (Ark) and RIPE Atlas measurement platforms, BGP data, and custom measurements from strategic vantage points.

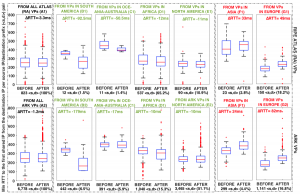

Boxplots of minimum RTTs from Ark and Atlas Vantage Points to the common IP hops closest to the destination IPs. Sets BEFORE and AFTER correspond to the periods pre- and post-SACS deployment. We present ∆RTT (AFTER minus BEFORE) per sub-figure. RTT changes are similar across measurement platforms. Paths from South America experienced a median RTT decrease of 38%, those from Oceania-Australia a smaller decrease of 8%, and those from Africa and North America a decrease of roughly 3%. Conversely, paths from Europe and Asia that crossed SACS after its deployment experienced an average RTT increase of 40% and 9%, respectively.
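To make the ∆RTT (AFTER minus BEFORE) comparison concrete, here is a minimal sketch of how such a figure could be computed. It is not the code released with the paper: the function names, the `(vantage_point, destination, rtt_ms)` sample layout, and the choice to compare only pairs seen in both periods are assumptions made for illustration, and the step of selecting the common IP hop closest to the destination is left out.

```python
from statistics import median


def min_rtt_per_pair(samples):
    """Keep the minimum RTT observed for each (vantage_point, destination) pair.

    `samples` is an iterable of (vantage_point, destination, rtt_ms) tuples;
    this layout is a hypothetical one chosen for the sketch.
    """
    best = {}
    for vp, dst, rtt in samples:
        key = (vp, dst)
        if key not in best or rtt < best[key]:
            best[key] = rtt
    return best


def delta_rtt(before, after):
    """Return (delta_ms, delta_percent) of the median minimum RTT.

    Only pairs measured in both periods are compared, so that the two
    medians describe the same set of paths (an assumption of this sketch).
    """
    b = min_rtt_per_pair(before)
    a = min_rtt_per_pair(after)
    common = b.keys() & a.keys()
    if not common:
        raise ValueError("no (vantage point, destination) pair in both periods")
    median_before = median(b[k] for k in common)
    median_after = median(a[k] for k in common)
    delta = median_after - median_before  # AFTER minus BEFORE
    return delta, 100.0 * delta / median_before
```

A negative percentage corresponds to a latency improvement after deployment, a positive one to a degradation.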

As shown in the above figure, our findings included:

- the median RTT decrease from Africa to Brazil was roughly a third of that from South America to Angola.

- surprising performance degradations to/from some regions worldwide, e.g., Asia and Europe.

We also offered suggestions for avoiding the suboptimal routing that gives rise to such performance degradations after future cable activations. Network operators could:

- Inform their BGP neighbours to allow time for changes

- Ensure optimal iBGP configs post-activation

- Use measurement platforms to verify path optimality
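One simple way to check a measured path for the kind of circuitous routing described in the abstract is to compare the distance travelled hop by hop with the direct distance between the endpoints. The sketch below is an illustration only, not the method used in the paper: it assumes each traceroute hop has already been geolocated to a `(latitude, longitude)` pair, and `haversine_km` and `path_stretch` are hypothetical helper names.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(a, b):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    lat1, lon1 = map(radians, a)
    lat2, lon2 = map(radians, b)
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(h))


def path_stretch(hops):
    """Ratio of hop-by-hop distance to direct source-destination distance.

    `hops` is a list of (lat, lon) pairs, source first and destination last
    (assumed to come from an IP geolocation step not shown here).
    A value close to 1 suggests a direct path; a large value suggests a detour.
    """
    if len(hops) < 2:
        raise ValueError("need at least a source and a destination")
    direct = haversine_km(hops[0], hops[-1])
    if direct == 0:
        raise ValueError("source and destination are at the same location")
    travelled = sum(haversine_km(p, q) for p, q in zip(hops, hops[1:]))
    return travelled / direct
```

Geolocation of router IPs is often imprecise, so a high stretch value is best treated as a prompt for closer inspection of the path rather than as proof of suboptimal routing.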

To enable reproducibility of this work, we made our tools publicly accessible on GitHub.

Read the full paper on the CAIDA website or watch the PAM presentation video on YouTube.