BGPStream: a software framework for live and historical BGP data analysis

Wednesday, November 23rd, 2016 by kc

One of the three CAIDA papers presented at ACM’s 2016 Internet Measurement Conference this month punctuated years of work to develop an open-source software framework for the analysis of historical and real-time BGP (Border Gateway Protocol, the Internet’s interdomain routing protocol) data. Although BGP is a crucial operational component of the Internet infrastructure, and is the subject of research in the areas of Internet performance, security, topology, protocols, economics, etc., until now there has been no efficient way of processing large amounts of distributed and/or live BGP measurement data. BGPStream fills this gap, enabling efficient investigation of events, rapid prototyping, and building complex tools and large-scale monitoring applications (e.g., detection of connectivity disruptions or BGP hijacking attacks).

We released the BGPStream platform earlier in the year, and it has already supported projects at three hackathons, starting with CAIDA’s first BGP hackathon in February 2016. In June 2016, John Kristoff led a project at NANOG’s first hackathon; when he presented the project results he noted, “Ultimately, [BGPStream] was going to save us a tremendous amount of time because this provided us with an interface into routing data that CAIDA collects and aggregates from multiple places. That allowed us to build short pieces of code that would tie in pulling out information based on community tag or next hop address.” Most recently, at the RIPE NCC IXP Tools Hackathon in October 2016, the Universal Looking Glass team based their analysis on BGPStream and worked to add the BGP measurement data published by Packet Clearing House as a data source supported by BGPStream.
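To give a flavor of the "short pieces of code" mentioned in the quote, here is a minimal sketch of filtering BGP update elements by community tag or next hop address. It deliberately does not call the BGPStream API: the record layout (`prefix`, `next_hop`, `as_path`, `communities`), the sample data, and the helper names `filter_elems` and `origin_asn` are all assumptions made for illustration, using documentation-range prefixes and AS numbers.

```python
from typing import Dict, Iterable, Iterator, Optional

# Hypothetical records, loosely shaped like BGP update elements.
# The field names are illustrative assumptions, not the BGPStream schema.
SAMPLE_ELEMS = [
    {
        "prefix": "192.0.2.0/24",
        "next_hop": "198.51.100.1",
        "as_path": "64496 64497 64498",
        "communities": {"64496:100", "64497:200"},
    },
    {
        "prefix": "198.51.100.0/24",
        "next_hop": "198.51.100.2",
        "as_path": "64496 64499",
        "communities": {"64496:300"},
    },
    {
        "prefix": "203.0.113.0/24",
        "next_hop": "198.51.100.1",
        "as_path": "64500 64497 64497 64501",
        "communities": set(),
    },
]


def filter_elems(
    elems: Iterable[Dict],
    community: Optional[str] = None,
    next_hop: Optional[str] = None,
) -> Iterator[Dict]:
    """Yield elements matching every criterion that was supplied."""
    for elem in elems:
        if community is not None and community not in elem["communities"]:
            continue
        if next_hop is not None and elem["next_hop"] != next_hop:
            continue
        yield elem


def origin_asn(as_path: str) -> Optional[str]:
    """Return the last AS on a space-separated AS path, or None if empty."""
    hops = as_path.split()
    return hops[-1] if hops else None


if __name__ == "__main__":
    for elem in filter_elems(SAMPLE_ELEMS, next_hop="198.51.100.1"):
        print(elem["prefix"], "origin AS", origin_asn(elem["as_path"]))
```

In a real tool the records would come from a BGPStream data source rather than a hard-coded list; the filtering logic is the part that stays this short.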

Other researchers have also already made use of BGPStream for Internet path prediction projects, including Sibyl: A Practical Internet Route Oracle (where they used BGPStream to extract AS paths for comparison against traceroute measurements), and PathCache: a path prediction toolkit.

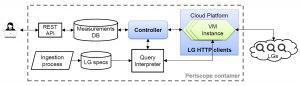

The IMC paper describes the goals and architecture of BGPStream, and uses case studies to illustrate how to apply the components of the framework to different scenarios, including complex services for global Internet monitoring that we built on top of it.

It was particularly gratifying to hear the next speaker in the session at the conference begin his talk by saying that BGPStream would have made the work he was about to present a lot easier. That’s exactly the impact we hope BGPStream has on the community!

The work was supported by two NSF-funded grants, CNS-1228994 and CNS-1423659, and by the DHS-funded contract N66001-12-C-0130.