Archive for the 'Security' Category

Thursday, February 18th, 2016 by Josh Polterock

We set out to conduct a social experiment of sorts, to host a hackathon to hack streaming BGP data. We had no idea we would get such an enthusiastic reaction from the community and that we would reach capacity. We were pleasantly surprised at the response to our invitations when 25 experts came to interact with 50 researchers and practitioners (30 of whom were graduate students). We felt honored to have participants from 15 countries around the world and experts from companies such as Cisco, Comcast, Google, Facebook and NTT, who came to share their knowledge and to help guide and assist our challenge teams.

Having so many domain experts from so many institutions and companies with deep technical understanding of the BGP ecosystem together in one room greatly increased the kinetic potential for what we might accomplish over the course of our two days.

(more…)

Posted in Commentaries, Data Collection, Internet Outages, Measurement, Meetings, Routing, Security, Topology, Visualization | No Comments »

Monday, July 20th, 2015 by kc

I had the honor of contributing to a panel on “Cyberwarfare and cyberattacks: protecting ourselves within existing limitations” at this year’s 9th Circuit Judicial Conference. The panel moderator was Hon. Thomas M. Hardiman, and the other panelists were Professor Peter Cowhey, of UCSD’s School of Global Policy and Strategy, and Professor and Lt. Col. Shane R. Reeves of West Point Academy. Lt. Col. Reeves gave a brief primer on the framework of the Law of Armed Conflict, distinguished an act of cyberwar from a cyberattack, and described the implications for political and legal constraints on governmental and private sector responses. Professor Cowhey followed with a perspective on how economic forces also constrain cybersecurity preparedness and response, drawing comparisons with other industries for which the cost of security technology is perceived to exceed its benefit by those who must invest in its deployment.

I used a visualization of an Internet-wide cybersecurity event to illustrate technical, economic, and legal dimensions of the ecosystem that render the fundamental vulnerabilities of today’s Internet infrastructure so persistent and pernicious. A few people said I talked too fast for them to understand all the points I was trying to make, so I thought I should post the notes I used during my panel remarks. (My remarks borrowed heavily from Dan Geer’s two essays: Cybersecurity and National Policy (2010), and his more recent Cybersecurity as Realpolitik (video), both of which I highly recommend.)

After explaining the basic concept of a botnet, I showed a video derived from CAIDA’s analysis of a botnet scanning the entire IPv4 address space (discovered and comprehensively analyzed by Alberto Dainotti and Alistair King). I gave a (too) quick rundown of the technological, economic, and legal circumstances of the Internet ecosystem that facilitate the deployment of botnets and other threats to networked critical infrastructure.

(more…)

Posted in Commentaries, Meetings, Policy, Security | Comments Off on Panel on Cyberwarfare and Cyberattacks at 9th Circuit Judicial Conference

Friday, June 6th, 2014 by Josh Polterock

On 28-29 May 2014, DHS Science and Technology Directorate (S&T) held a meeting of the Principal Investigators of the PREDICT (Protected Repository for the Defense of Infrastructure Against Cyber Threats) Project, an initiative to facilitate the accessibility of computer and network operational data for use in cybersecurity defensive R&D. The project is a three-way partnership among government, critical information infrastructure providers, and security development communities (both academic and commercial), all of whom seek technical solutions to protect the public and private information infrastructure. The primary goal of PREDICT is to bridge the gap between producers of security-relevant network operations data and technology developers and evaluators who can leverage this data to accelerate the design, production, and evaluation of next-generation cybersecurity solutions.

In addition to presenting project updates, each PI presented on a special topic suggested by Program Manager Doug Maughan. I presented some reflective thoughts on 10 Years Later: What Would I Have Done Differently? (Or what would I do today?). In this presentation, I revisited my 2008 top ten list of things lawyers should know about the Internet to frame some proposed forward-looking strategies for the PREDICT project in 2014.

Also noted at the meeting: DHS recently released a new broad agency announcement (BAA) that will contractually require investigators to contribute to PREDICT any data created or used in testing and evaluation of the funded work (if the investigator has redistribution rights, and subject to appropriate disclosure control).

Posted in Commentaries, Data Collection, Meetings, Policy, Security, Top Tens | No Comments »

Monday, May 13th, 2013 by Alistair King

On March 17, 2013, the authors of an anonymous email to the “Full Disclosure” mailing list announced that they had conducted a full probing of the entire IPv4 Internet the previous year. They claimed to have used a botnet (named the “Carna” botnet) created by infecting machines that were vulnerable because they used default login/password pairs (e.g., admin/admin). The botnet instructed each of these machines to execute a portion of the scan and then transfer the results to a central server. The authors also published a detailed description of how they operated, along with 9TB of raw logs of the scanning activity.

Online magazines and newspapers reported the news, which triggered some debate in the research community about the ethical implications of using such data for research purposes. A more fundamental question received less attention: since the authors went out of their way to remain anonymous, and the only data available about this event is the data they provide, how do we know this scan actually happened? If it did, how do we know that the resulting data is correct?

(more…)

Posted in Commentaries, Measurement, Security | 2 Comments »

Monday, April 15th, 2013 by Bradley Huffaker

[While getting our feet wet with D3 (what a wonderful tool!), we finally tried this analysis tidbit that’s been on our list for a while.]

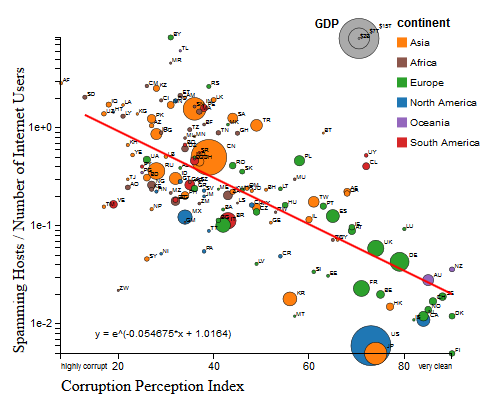

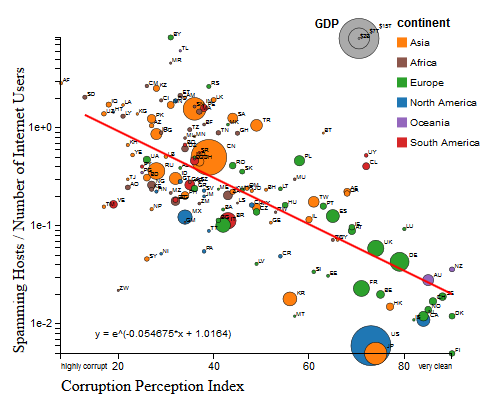

We recently analyzed the reputation of a country’s Internet (IPv4) addresses by examining the number of blacklisted IPv4 addresses that geolocate to a given country. We compared this indicator with two qualitative measures of each country’s governance. We hypothesized that countries with more transparent, democratic governmental institutions would harbor a smaller fraction of misbehaving (blacklisted) hosts. The available data confirms this hypothesis. A similar correlation exists between perceived corruption and fraction of blacklisted IP addresses.
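The comparison described above can be sketched in a few lines. This is only an illustration under stated assumptions: the post does not say which correlation statistic was used, so plain Pearson correlation stands in here, and the three rows are synthetic placeholders, not CAIDA data. The names `blacklisted_fraction`, `pearson`, and `rows` are hypothetical.

```python
from math import sqrt


def blacklisted_fraction(blacklisted, total):
    """Fraction of a country's IPv4 addresses that appear on a blacklist."""
    if total <= 0:
        raise ValueError("total must be positive")
    return blacklisted / total


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences of length >= 2")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(vx * vy)


# Synthetic placeholder rows (NOT real measurements):
# (governance index score, blacklisted addresses, total geolocated addresses)
rows = [(2.0, 400, 10_000), (5.0, 250, 10_000), (8.0, 100, 10_000)]

scores = [score for score, _, _ in rows]
fractions = [blacklisted_fraction(b, t) for _, b, t in rows]
r = pearson(scores, fractions)
print(f"correlation between index score and blacklisted fraction: {r:.3f}")
```

A negative `r` would be consistent with the hypothesis that higher governance scores go with a smaller blacklisted fraction; a rank-based statistic may be a better fit for real, skewed data.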

For more details of data sources and analysis, see:

http://www.caida.org/research/policy/country-level-ip-reputation/

[Figure panels: (1) x: Corruption Perceptions Index, y: IP population %; (2) x: Democracy Index, y: IP population %; (3) x: Democracy Index, y: IP infection %]

Interactive graph and analysis on the CAIDA website

Posted in Commentaries, Economics, Policy, Security, Visualization | 1 Comment »

Wednesday, December 5th, 2012 by Alistair King and Alberto Dainotti

On the 29th of November, shortly after 10am UTC (12pm Damascus time), the Syrian state telecom (AS29386) withdrew the majority of BGP routes to Syrian networks (see reports from Renesys, Arbor, CloudFlare, BGPmon). Five prefixes allocated to Syrian organizations remained reachable for another several hours, served by Tata Communications. By midnight UTC on the 29th, as reported by BGPmon, these five prefixes had also been withdrawn from the global routing table, completing the disconnection of Syria from the rest of the Internet.
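To make the sequence of events concrete, here is a minimal sketch of how one might replay a stream of BGP announcements and withdrawals to see which prefixes are still reachable at the end. It assumes a highly simplified view from a single vantage point; the function name `reachable_prefixes`, the `(timestamp, kind, prefix)` tuple layout, and the sample events (which use documentation prefixes) are all hypothetical and do not represent the data or tooling behind the reports cited above.

```python
def reachable_prefixes(updates):
    """Replay (timestamp, kind, prefix) updates in time order.

    kind is "A" for an announcement or "W" for a withdrawal.
    Returns the set of prefixes still announced after the last update.
    """
    announced = set()
    # Sorting tuples orders by timestamp first; on a tie, "A" sorts before "W",
    # so a withdrawal with the same timestamp is applied last.
    for _, kind, prefix in sorted(updates):
        if kind == "A":
            announced.add(prefix)
        elif kind == "W":
            announced.discard(prefix)
        else:
            raise ValueError(f"unknown update kind: {kind!r}")
    return announced


# Hypothetical events using documentation prefixes, not real Syrian routes.
events = [
    (100, "A", "192.0.2.0/24"),
    (100, "A", "198.51.100.0/24"),
    (100, "A", "203.0.113.0/24"),
    (200, "W", "192.0.2.0/24"),     # bulk of routes withdrawn first
    (200, "W", "198.51.100.0/24"),
    (300, "W", "203.0.113.0/24"),   # remaining prefix withdrawn later
]

after_first_wave = reachable_prefixes([e for e in events if e[0] <= 200])
at_end = reachable_prefixes(events)
print(sorted(after_first_wave), sorted(at_end))
```

In practice, reachability is judged across many vantage points, since a prefix withdrawn at one peer may still be visible at another.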

(more…)

Posted in Commentaries, International Networking, Internet Outages, Measurement, Security | No Comments »

Tuesday, December 4th, 2012 by Alberto Dainotti

Last week CAIDA researchers (Alberto and kc) visited National Harbor (Maryland) for the 1st NSF Secure and Trustworthy Cyberspace (SaTC) Principal Investigators Meeting. The National Science Foundation’s SaTC program is an interdisciplinary expansion of the old Trustworthy Computing program sponsored by CISE, extended to include the SBE, MPS, and EHR directorates. The SaTC program also includes a bold new Transition to Practice category of project funding, intended to address the challenge of moving from research to capability, which we are excited and honored to be a part of.

(more…)

Posted in Commentaries, Measurement, Security, Updates | No Comments »

Wednesday, March 23rd, 2011 by Emile Aben

Amidst the recent political unrest in the Middle East, researchers have observed significant changes in Internet traffic and connectivity. In this article we tap into a previously unused source of data: unsolicited Internet traffic arriving from Libya. The traffic data we captured shows distinct changes in unsolicited traffic patterns since 17 February 2011.

Most of the information already published about Internet connectivity in the Middle East has been based on four types of data:

(more…)

Posted in Commentaries, Future, International Networking, Internet Outages, Measurement, Routing, Security, Topology | 2 Comments »

Monday, May 4th, 2009 by kc

Stefan pointed me at a paper titled “Designing and Conducting Phishing Experiments” (in IEEE Technology and Society Special Issue on Usability and Security, 2007) that makes an amazing claim: it might be more ethical not to debrief the subjects of your phishing experiments after the experiments are over. In particular, you might ‘do less harm’ if you do not reveal that some of the sites you had them browse were phishing sites.

(more…)

Posted in Commentaries, Policy, Review, Security | No Comments »

Sunday, April 5th, 2009 by kc

Update: In May 2015, ownership of Spoofer transferred from MIT to CAIDA

We are studying an empirical Internet question central to its security, stability, and sustainability: how many networks allow packets with spoofed (fake) IP addresses to leave their network destined for the global Internet? In collaboration with MIT, we have designed an experiment that enables the most rigorous analysis of the prevalence of IP spoofing thus far, and we need your help running a measurement to support this study.

This week Rob Beverly finally announced to NANOG an update to Spoofer that he’s been working on for a few months. Spoofer is one of the coolest Internet measurement tools we’ve seen in a long time, especially now that he is using Ark nodes as receivers (of spoofed and non-spoofed packets), giving him 20X more path coverage than he could get with a single receiver at MIT.
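For readers unfamiliar with the underlying idea, a network that validates source addresses would drop any outbound packet whose source address falls outside its own prefixes. The sketch below illustrates only that check, using Python's standard `ipaddress` module. It is not how Spoofer itself works, and the function name `is_spoofed` and the example prefixes (reserved documentation ranges) are assumptions made for illustration.

```python
import ipaddress


def is_spoofed(source, own_prefixes):
    """Return True if `source` lies outside every prefix the network owns.

    An edge router doing source address validation would drop such a
    packet instead of forwarding it to the global Internet.
    """
    addr = ipaddress.ip_address(source)
    return not any(addr in ipaddress.ip_network(p) for p in own_prefixes)


# Hypothetical network that owns a single documentation prefix.
own = ["192.0.2.0/24"]

print(is_spoofed("192.0.2.10", own))    # legitimate source inside the prefix
print(is_spoofed("198.51.100.7", own))  # source outside the prefix
```

A network that forwards the second kind of packet is one that "allows spoofing" in the sense of the question posed above.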

(more…)

Posted in Commentaries, Routing, Security, Updates | 5 Comments »